Accuracy, precision, recall, and the F1 score are four common metrics for evaluating classification models. For a binary classifier, every prediction falls into one of four outcomes:

- True Negative (TN): case was negative and predicted negative

- True Positive (TP): case was positive and predicted positive

- False Negative (FN): case was positive but predicted negative

- False Positive (FP): case was negative but predicted positive

|  | Predicted Negative | Predicted Positive |
|---|---|---|
| Negative Cases | TN | FP |
| Positive Cases | FN | TP |
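A minimal Python sketch of how the four counts in the table can be tallied from lists of actual and predicted labels. It assumes labels are encoded as `1` (positive) and `0` (negative); the function name `confusion_counts` and the sample labels are illustrative, not taken from any particular library.

```python
from collections import namedtuple

# Container for the four outcome counts, in the same order as the table cells.
Counts = namedtuple("Counts", ["tn", "fp", "fn", "tp"])


def confusion_counts(y_true, y_pred, positive=1):
    """Tally TN, FP, FN, TP for a binary task (positive label assumed to be 1)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    tn = fp = fn = tp = 0
    for actual, predicted in zip(y_true, y_pred):
        if actual == positive:
            if predicted == positive:
                tp += 1  # positive case, predicted positive
            else:
                fn += 1  # positive case, predicted negative
        else:
            if predicted == positive:
                fp += 1  # negative case, predicted positive
            else:
                tn += 1  # negative case, predicted negative
    return Counts(tn, fp, fn, tp)


# Hypothetical labels for eight cases.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 0, 0]
print(confusion_counts(y_true, y_pred))  # Counts(tn=3, fp=1, fn=2, tp=2)
```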

Accuracy

The fraction of all predictions that are correct:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$
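A sketch of accuracy computed from the four counts: correct predictions (TP + TN) divided by the total number of predictions. The function name and the example counts are hypothetical.

```python
def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    """Fraction of all predictions that are correct: (TP + TN) / total."""
    total = tp + tn + fp + fn
    if total == 0:
        raise ValueError("accuracy is undefined with no predictions")
    return (tp + tn) / total


# Hypothetical counts: 2 TP, 3 TN, 1 FP, 2 FN, i.e. 5 correct out of 8.
print(accuracy(tp=2, tn=3, fp=1, fn=2))  # 0.625
```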

Precision

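Of all items predicted positive (the selected items), the fraction that are actually positive (relevant):

$$\text{Precision} = \frac{TP}{TP + FP}$$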
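A sketch of precision from the counts. The function name and counts are hypothetical, and returning `0.0` when nothing was predicted positive is an assumed convention, since the ratio is undefined in that case.

```python
def precision(tp: int, fp: int) -> float:
    """Fraction of predicted-positive (selected) items that are actually positive."""
    selected = tp + fp
    if selected == 0:
        # Nothing was predicted positive; returning 0.0 is an assumed convention.
        return 0.0
    return tp / selected


# Hypothetical counts: 3 items selected, 2 of them relevant.
print(precision(tp=2, fp=1))  # 0.6666666666666666
```

Libraries differ on the zero-denominator case (scikit-learn's `precision_score`, for instance, exposes a `zero_division` parameter), so it is worth checking which convention your tooling uses.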

Recall

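Of all actually positive items (the relevant items), the fraction that are predicted positive (selected):

$$\text{Recall} = \frac{TP}{TP + FN}$$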
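The matching sketch for recall. As with precision, the function name and counts are hypothetical, and returning `0.0` when there are no positive cases at all is an assumed convention.

```python
def recall(tp: int, fn: int) -> float:
    """Fraction of actually positive (relevant) items that were predicted positive."""
    relevant = tp + fn
    if relevant == 0:
        # No positive cases exist; returning 0.0 is an assumed convention.
        return 0.0
    return tp / relevant


# Hypothetical counts: 4 relevant items, 2 of them selected.
print(recall(tp=2, fn=2))  # 0.5
```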

Harmonic score

Precision and recall often pull in opposite directions. For example, if the model predicts only one case as positive and that case is correct, precision is 100%, but recall is likely very low because most of the positive cases are missed.
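To put numbers on this, a small sketch with hypothetical counts: there are 100 actual positive cases, the model predicts only one item as positive, and that prediction is correct.

```python
# Hypothetical counts: 100 actual positives, only 1 predicted positive (and it is correct).
tp = 1   # the single correct positive prediction
fp = 0   # no negative case was predicted positive
fn = 99  # every other positive case was missed

precision = tp / (tp + fp)
recall = tp / (tp + fn)

print(f"precision = {precision:.0%}")  # precision = 100%
print(f"recall    = {recall:.0%}")     # recall    = 1%
```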

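To combine such measures into a single number, we can use the harmonic mean. Unlike the arithmetic mean, it is pulled toward the smaller value, so the combined score is high only when every measure is high.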
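A quick comparison using Python's standard `statistics` module, with the hypothetical scores precision = 1.0 and recall = 0.01. The arithmetic mean still looks moderate, while the harmonic mean stays close to the weaker score.

```python
from statistics import harmonic_mean, mean

# Hypothetical scores: perfect precision, very poor recall.
precision, recall = 1.0, 0.01

arithmetic = mean([precision, recall])
harmonic = harmonic_mean([precision, recall])

print(f"arithmetic mean: {arithmetic:.4f}")  # arithmetic mean: 0.5050
print(f"harmonic mean:   {harmonic:.4f}")    # harmonic mean:   0.0198
```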

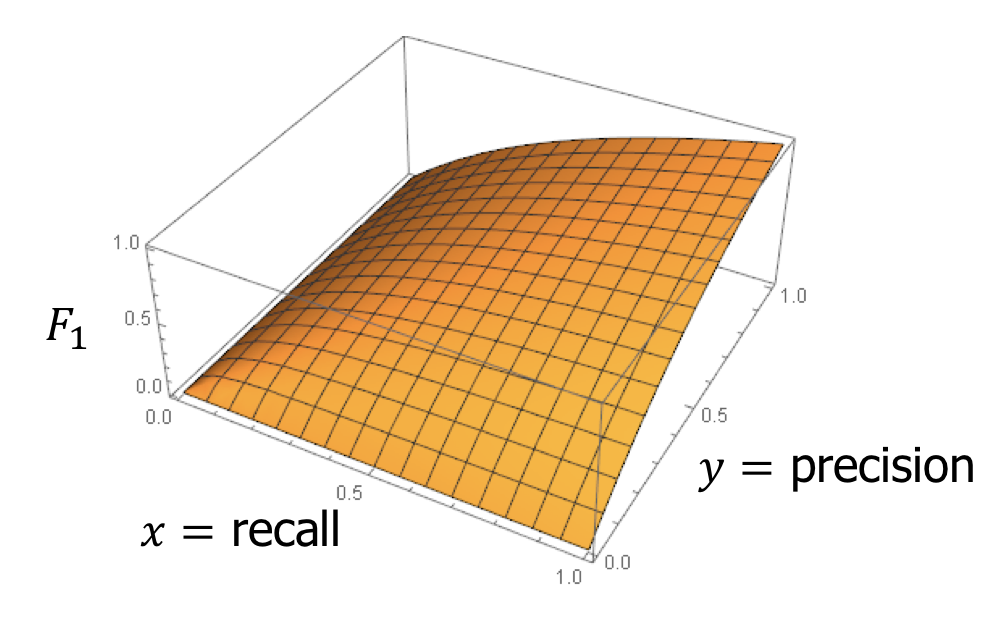

F1 score

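The F1 score is the harmonic mean of precision and recall:

$$F_1 = \frac{2}{\frac{1}{\text{recall}} + \frac{1}{\text{precision}}} = 2 \cdot \frac{\text{precision} \cdot \text{recall}}{\text{precision} + \text{recall}}$$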
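A sketch of the F1 formula in both of its written forms, showing that they agree. The function names and the example scores are hypothetical; returning `0.0` when precision and recall are both zero is an assumed convention, since the formula is undefined there.

```python
def f1_from_scores(precision: float, recall: float) -> float:
    """F1 = 2 * P * R / (P + R), the harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0  # assumed convention: both scores zero gives F1 of zero
    return 2 * precision * recall / (precision + recall)


def f1_reciprocal_form(precision: float, recall: float) -> float:
    """The same quantity written as 2 / (1/recall + 1/precision); needs both > 0."""
    return 2 / (1 / recall + 1 / precision)


p, r = 2 / 3, 0.5  # hypothetical precision and recall
print(f1_from_scores(p, r))      # about 0.5714 (exactly 4/7 in real arithmetic)
print(f1_reciprocal_form(p, r))  # same value, up to floating-point rounding
```

The product form is usually preferred in code because it avoids dividing by zero when only one of the two scores is zero.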

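Writing $x$ for precision and $y$ for recall, $F_1 = f(x, y) = \frac{2xy}{x + y}$. For $x, y \in (0, 1]$ we have $f \in (0, 1]$, and $f$ increases whenever either $x$ or $y$ increases.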
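The monotonicity follows from the derivative $\partial f / \partial x = 2y^2 / (x + y)^2 > 0$ for $y > 0$, and by symmetry the same holds for $y$. The Python sketch below is only a numerical spot check of the range and monotonicity on a coarse grid of hypothetical values, not a proof.

```python
def f1(x: float, y: float) -> float:
    """F1 as a function of precision x and recall y, for x, y in (0, 1]."""
    return 2 * x * y / (x + y)


grid = [i / 10 for i in range(1, 11)]  # 0.1, 0.2, ..., 1.0

for i, x in enumerate(grid):
    for j, y in enumerate(grid):
        value = f1(x, y)
        assert 0 < value <= 1, (x, y, value)  # range stays within (0, 1]
        if i + 1 < len(grid):
            assert f1(grid[i + 1], y) > value  # higher precision -> higher F1
        if j + 1 < len(grid):
            assert f1(x, grid[j + 1]) > value  # higher recall -> higher F1

print("range and monotonicity hold on this grid")
```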
